Part 1: About our audit

1.1

The Primary Health Care Strategy (the Strategy) was launched in February 2001 as an essential step towards achieving the New Zealand Health Strategy. The Ministry of Health (the Ministry) is responsible for ensuring that the Strategy is carried out, which includes monitoring and reporting progress and using the information it collects to inform its decision-making. We audited the Ministry’s role in monitoring the Strategy’s progress.

1.2

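In this Part, we discuss:

- the focus of our audit;

- our audit criteria;

- what we did not audit; and

- our sources of evidence.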

The focus of our audit

1.3

A core purpose of performance reporting is to enable public accountability for the responsible use of public resources. This includes demonstrating that public services are being delivered effectively and efficiently. As well as their external accountability purpose, performance reports should reflect good management practices. Such practices involve clearly articulating a strategy, linking that strategy to operational and other business plans, monitoring the delivery of operational and business plans, and evaluating the strategy’s effects.

1.4

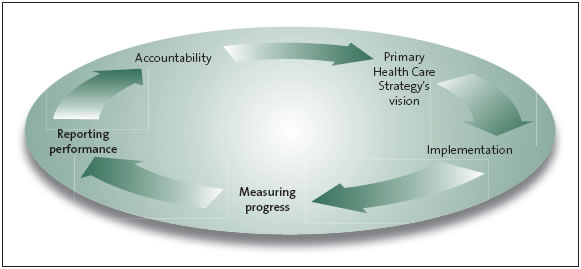

Figure 1 sets out a simple performance management cycle. This starts with a clear set of strategic goals (in this case, the Strategy’s vision statement) that are fulfilled through projects or programmes. The progress of these projects or programmes against the strategic goals is then measured, and overall performance is evaluated and reported on for accountability purposes.

1.5

Our audit focused on the “measuring progress” and “reporting performance” parts of the cycle (see Figure 1). We also audited whether the Ministry could show that it was using the information it collected to maintain progress and, where needed, to change how the Strategy was being implemented so that the Strategy would succeed.

1.6

We had several reasons for auditing the Ministry’s monitoring of progress against the Strategy’s goals:

- The Strategy, with its five- to ten-year implementation period, was an essential step in achieving the New Zealand Health Strategy. Measuring progress and reporting performance are important for ensuring that the Strategy stays on schedule and, in turn, that the Government’s wider health aims are achieved.

- The Strategy required significant changes to primary health care structures and funding methods. When making major structural changes in any sector, it is important that the changes result in the expected improvements – in this case, improved primary health care services that improve individual and population health.

- Ineffective progress monitoring could lead to individuals and population groups waiting longer than necessary to receive more accessible and better-quality primary health care services.

- Significant additional funding has been spent on carrying out the Strategy. Those who manage the funding or are responsible for monitoring expenditure must be accountable for reporting the value gained – or for advising when gains can be expected.

Figure 1

A performance management cycle

A sound framework for carrying out any strategy will use a performance management cycle.

Source: Adapted from International Organization of Supreme Audit Institutions Working Group on Environmental Auditing (2004), Sustainable Development: The Role of Supreme Audit Institutions, ISSAI 5130.

Our audit criteria

1.7

We consulted the Ministry in detail about the focus of our audit and our audit criteria. The Ministry supported the audit’s focus and helped to develop, and commented on, our preliminary and final set of criteria.

1.8

We audited whether the Ministry was collecting and reporting information to assess the Strategy’s progress. We expected the Ministry to have a framework that allowed it to:

- set measures for each of the Strategy’s goals;

- report whether progress against the measures was meeting expectations, including whether progress was on schedule; and

- use the information collected to maintain progress and ensure that the Strategy’s goals would be achieved.

1.9

We use “measures” as a collective term for the methods available to the Ministry to monitor and judge progress against the Strategy’s goals, such as written narrative reporting, targets, milestones, indicators, results, and inputs. The Strategy’s goals contain outcomes, and we expected the Ministry to have prepared measures for those outcomes. We expected the Ministry’s measures to include a baseline or starting position to judge progress against.

1.10

Below the level of the Strategy’s goals, measures could also be useful for monitoring the Strategy’s implementation, including its Six Key Directions and Five Priorities for Early Action. This could involve a commitment to publish annual (or biennial) reports about the progress being made on each direction and priority. The reports could describe any projects or programmes under way or proposed, set out their timelines, and highlight matters that help or hinder progress.

1.11

We do not have a prescriptive view about what measures the Ministry should have. It is for the Ministry, not us, to choose suitable measures for each level of monitoring. However, we expected measures to be set for all the Strategy’s goals.

1.12

We do not necessarily expect the Ministry to have used these particular examples, but measures could have been set for:

- phasing in the new funding;

- involving X% of practising General Practitioners (GPs) in primary health organisations (PHOs) within Y years;

- enrolling X% of high-needs patients within Y years;

- demonstrating community involvement with PHOs;

- demonstrating the involvement of a wider range of health professionals providing services to enrolled patients;

- showing that high-priority groups of patients have better care co-ordination; or

- reporting on critical projects to show that PHOs have changed the way they deliver services to focus on population health.

1.13

We expected the Ministry to have a well-designed set of measures and, where possible, to use existing and reliable measures. For example, the Ministry could have used some of the data used to produce The Future Shape of Primary Health Care (see paragraphs 2.4 and 2.5).

1.14

We expected the Ministry’s measures to cover the full breadth of the Strategy’s goals, because this would help to avoid concentrating measures – and therefore the attention of district health boards (DHBs) and PHOs – in one area. Concentrated measures could result in little or no change occurring in other areas. We also expected that the aspects measured in each area might be modified as the Strategy is carried out.

1.15

We did not expect the Ministry to necessarily have a measure for every activity. We expected the Ministry to have been selective and to have considered the cost-effectiveness of collecting and reporting information when setting measures.

1.16

Reporting against a broad range of measures would enable the Ministry to highlight good progress and practices, study areas of slow progress, and address any problem areas. Being able to report and celebrate achievements also helps to encourage and support further changes.

1.17

Being clear about measures sets the direction for everyone with a role in fulfilling the Strategy. Measures help to identify priorities, clarify roles, and support purposeful progress towards goals. They allow people and organisations to provide leadership at all levels within the health and disability system, even though each party has its own specific role. Too few or inadequate measures increase the risk of piecemeal change, which would result in isolated or sporadic reporting of improvements. Isolated improvements may be worthwhile on their own, but their value in showing the Strategy’s progress is limited.

1.18

Care in setting short-term and medium-term measures is especially important when changes can take years to show up in health statistics for the wider population. It would be unrealistic to expect improvements in some national population health measures within two to three years if progress is not likely to be visible for 10 or more years.

What we did not audit

1.19

We did not audit whether the Strategy’s goals are being achieved or whether the Strategy is producing value for money. Our focus was on whether the Ministry is collecting and reporting information about the Strategy’s progress that would help Parliament and the public to judge such matters.

1.20

We did not audit the primary health care funding or the financial information reproduced in our report because it was not necessary for examining the Ministry’s monitoring of the Strategy’s progress.

Our sources of evidence

1.21

We collected evidence for our audit by interviewing current Ministry employees (and a past employee with extensive knowledge about the Strategy) and employees of District Health Boards New Zealand,1 which is involved in the PHO Performance Programme2 on behalf of DHBs.

1.22

The Ministry provided us with documents, including evaluation reports, internal documents, and reports to Cabinet, Parliament, and Ministers of Health. We also used information on websites belonging to the Ministry and District Health Boards New Zealand, and links provided from those sites.

1: District Health Boards New Zealand was formed by all 21 DHBs in December 2000 to co-ordinate their activities on selected issues.

2: The PHO Performance Programme was previously the PHO Performance Management Programme.
